As you read this, your computer or phone is processing tons of information at very high speeds, and this process is simpler than you may think.

Consider this: the human brain makes simple comparisons that usually come in the form of “yes” and “no.” Similarly, computers “think” in these basic terms. Inside your computer, microscopic switches called transistors are being flipped on and off right now, and these on and off positions essentially correspond to “yes” and “no,” “true” and “false,” or as computers interpret them, “1” and “0.” These 1s and 0s are the two symbols that represent numeric values in the binary system that computers utilize to process information.
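To make the 1s and 0s concrete, here is a minimal Python sketch of how a whole number can be written as a row of on/off switches and read back again. The helper names `to_bits` and `from_bits` are made up for illustration, and the width of 8 switches is an assumption chosen only to keep the output short.

```python
def to_bits(value: int, width: int = 8) -> str:
    """Write a non-negative whole number as a string of 1s and 0s."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative numbers")
    # The "b" format code produces binary digits; the leading 0 and the
    # width pad the result with zeros on the left.
    return format(value, f"0{width}b")


def from_bits(bits: str) -> int:
    """Read a string of 1s and 0s back as a whole number."""
    return int(bits, 2)


print(to_bits(5))             # 00000101
print(from_bits("00000101"))  # 5
```

Each character in the output stands for one switch: `1` is on and `0` is off.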

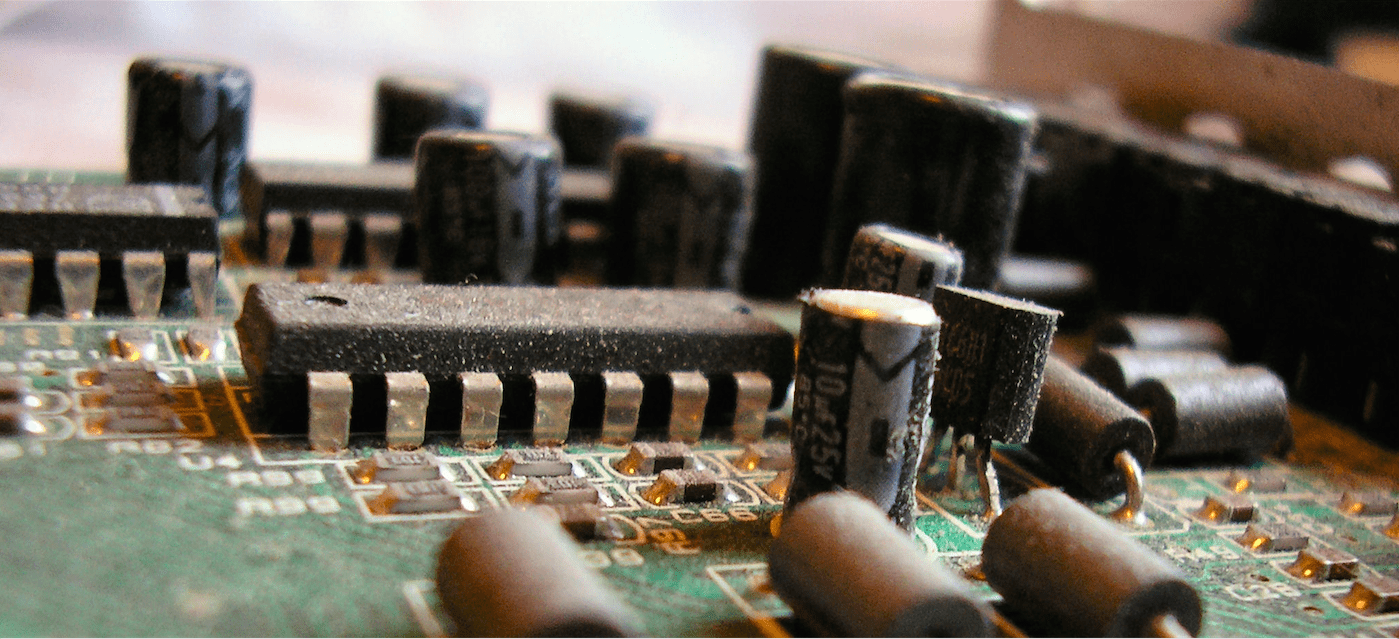

The transistors that represent these 1s and 0s are all organized onto small squares called microchips. The speed at which the transistors can flip on and off, known as the frequency, is a determining factor for how fast your computer can go. To produce a super speedy and efficient computer, we have to fit millions (and in modern chips, billions) of transistors onto the tiny microchips.

For the past few decades, computers have gotten faster and faster as engineers got better at packing smaller and smaller transistors onto tiny microchips. But as transistors get smaller, new problems start to arise.

First, in dealing with such small components, it is extremely difficult to produce reliable and affordable parts. When operating on such a small scale, it is much easier to make mistakes on the chips than if they were larger. Additionally, as microchips become smaller and smaller, it is increasingly difficult for the heat to dissipate away from the chip. To correct for this problem, we can make larger microchips, which can obviously house more transistors, but with more transistors comes more heat! Heat is a major issue in computers because not only can it damage the components (e.g. melting transistors), but it can also cause burns to your skin.

So with all of this talk about our limitations in creating safe, efficient, and small microchips, what is one possible solution currently in development? Quantum computing!

How is quantum computing different?

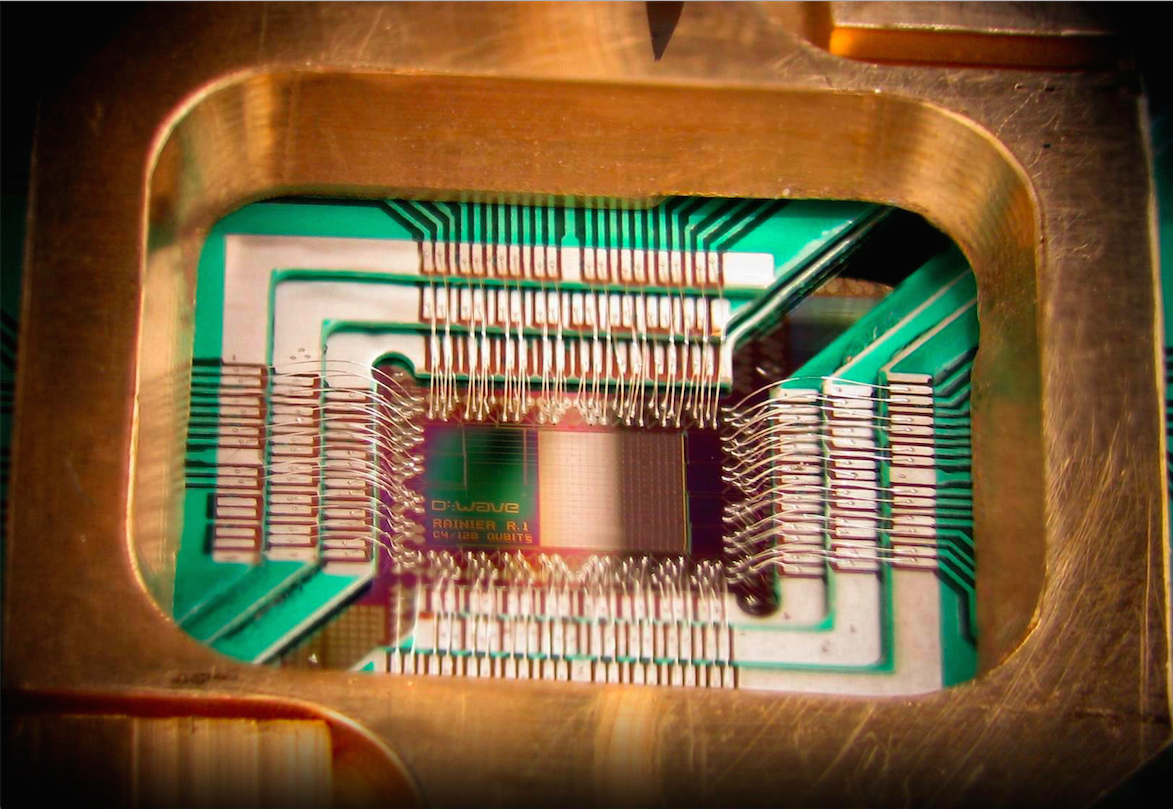

Quantum computing harnesses the power of atoms and molecules to perform tasks a transistor would perform, and it gets rid of the on/off system entirely. Quantum computers encode information as quantum bits, otherwise known as qubits. A qubit can be 0, 1, or a superposition of 0 and 1, which means it holds a weighted blend of both values at the same time! Due to superposition, processors in these quantum computers will be able to perform calculations with all possible values of the qubits simultaneously. Thus, quantum computers could possibly fix the great transistor problem!
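One common way to picture a single qubit on an ordinary computer is as a pair of numbers called amplitudes, one for 0 and one for 1. The Python sketch below is only an illustration under that assumption; the names `make_qubit` and `probabilities` are invented here and do not come from any quantum library. Squaring the size of each amplitude gives the chance of seeing that value.

```python
import math


def make_qubit(alpha: complex, beta: complex) -> tuple:
    """Build a qubit from an amplitude for 0 (alpha) and for 1 (beta).

    The pair is rescaled so the two probabilities add up to 1.
    """
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    if norm == 0:
        raise ValueError("at least one amplitude must be non-zero")
    return (alpha / norm, beta / norm)


def probabilities(qubit: tuple) -> tuple:
    """Return the chance of reading 0 and the chance of reading 1."""
    alpha, beta = qubit
    return (abs(alpha) ** 2, abs(beta) ** 2)


definitely_zero = make_qubit(1, 0)  # behaves like an ordinary 0
equal_blend = make_qubit(1, 1)      # an equal superposition of 0 and 1

print([round(p, 3) for p in probabilities(definitely_zero)])  # [1.0, 0.0]
print([round(p, 3) for p in probabilities(equal_blend)])      # [0.5, 0.5]
```

An ordinary bit only ever looks like the first case; a qubit can sit anywhere between the two.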

This simultaneous calculation across all the values of the qubits is called quantum parallelism, and it is the driving force behind most research carried out in quantum computing. Because quantum computers are not limited to just two states, they have the potential to be millions of times more powerful (and far faster) than the computer you are using right now.
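To get a feel for what “all possible values” means, it helps to count them: a register of n qubits has 2^n possible combinations of 0s and 1s. The short Python sketch below just does that counting, using a hypothetical helper named `combination_count`. It is not a measure of real-world speed, only of how quickly the number of combinations grows.

```python
def combination_count(n_qubits: int) -> int:
    """Number of distinct 0/1 combinations that n qubits can span."""
    if n_qubits < 0:
        raise ValueError("the number of qubits cannot be negative")
    return 2 ** n_qubits


for n in (1, 2, 10, 50):
    print(n, combination_count(n))
# 1 2
# 2 4
# 10 1024
# 50 1125899906842624
```

Adding a single qubit doubles the count, which is why even modest numbers of qubits are interesting to researchers.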

Quantum parallelism and superposition come with their own problems and limitations, however. Because quantum states are extremely fragile, simply observing a qubit forces it to randomly settle into a definite state (a single number) rather than remain in a state of superposition. Outside interactions with the environment (e.g. the mere observation of the data) have a huge impact on quantum data, and this is an issue because measurements and data collection require us to observe the data.
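The effect of observation can be imitated with a toy Python simulation. This is a sketch under simplifying assumptions, not a real quantum device: the qubit is modeled as a pair of amplitudes (one for 0, one for 1), the function name `measure` is invented for illustration, and ordinary pseudo-random numbers stand in for quantum randomness.

```python
import math
import random


def measure(qubit: tuple, rng: random.Random) -> tuple:
    """Observe a qubit: pick 0 or 1 at random, then collapse the state.

    Returns the observed value and the new, definite state.
    """
    alpha, _beta = qubit
    chance_of_zero = abs(alpha) ** 2
    outcome = 0 if rng.random() < chance_of_zero else 1
    # After the observation the superposition is gone: the qubit is now
    # entirely 0 or entirely 1.
    collapsed = (1.0, 0.0) if outcome == 0 else (0.0, 1.0)
    return outcome, collapsed


rng = random.Random(2024)  # fixed seed so the toy run is repeatable
equal_blend = (1 / math.sqrt(2), 1 / math.sqrt(2))

first, state_after = measure(equal_blend, rng)
second, _ = measure(state_after, rng)
print(first == second)  # True: looking again gives the same answer
```

The first look is a coin flip, but every later look at the same qubit repeats that result, because the blend of 0 and 1 no longer exists.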

Thus, in order to function, quantum computers must keep qubits strictly isolated from the outside world. With this in mind, we can still exploit the complexity of the quantum state if we can manipulate the qubits without directly observing their properties.

What are the current advancements in quantum computing?

So can we truly build a useful quantum computer? It seems that with quantum mechanics, anything is possible. Over the past three decades, we have made a lot of progress with quantum computer research and production, but we still have a long way to go before we can expect these machines to be on the market for a reasonable price.

Quantum theory was first applied to computers in 1981 by Paul Benioff. Since then, the first 2-qubit quantum computer was created and utilized in 1998; the first quantum byte (qubyte) computer in 2005; the first 12-qubit quantum computer in 2006; and the list goes on as time progresses. For a brief timeline of highlights of advancements in quantum computing, check this out.

One of the most important developments in quantum computing today is the utilization of graphene in the place of silicon. Graphene is a single layer of carbon atoms about 200 times stronger than steel, and it also has unusual electronic properties that are useful in quantum computing. (For more information about graphene's interesting properties, check out this podcast by Athens Science Observer's Graham Grable.) With these new improvements in place, some scientists believe that quantum computers will become commercially available within the next 20 years.

Most research conducted today is still highly theoretical due to difficulties in dealing with the extremely fragile and ever-changing nature of quantum mechanics. Though we cannot change the inherent properties of nature, we can learn to work with them (and around them). Maybe someday, all cell phones and computers will utilize the power of quantum mechanics! In the meantime, we will just have to wait and see where the research takes us.

About the Author

Paige Copenhaver is an undergraduate studying Physics and Astronomy at the University of Georgia. When she is not studying solar-type stars, she can be found playing ukulele or reading Lord of the Rings. You can email her at pac25136@uga.edu or follow her on Twitter: @p_copenhaver.
